Explore the intricacies of web scraping with Python using advanced techniques to handle dynamic content and multi-page structures. Efficiently extract, clean, and prepare 5K@ADA race results data for analysis with Selenium and BeautifulSoup.

Key Points

- Tools and Setup: Using Selenium and BeautifulSoup for web scraping.

- Dynamic Content: Handling and interacting with dynamic web pages.

- Data Extraction: Extracting data from HTML tables and managing pagination.

- Data Cleaning: Normalizing and preparing data for analysis.

Introduction

Web scraping is a powerful tool for extracting data from websites, but it often involves overcoming challenges posed by dynamic content and multi-page structures. This article walks through a web scraping project that uses Selenium to handle dynamic web content and BeautifulSoup to parse HTML. We'll use race results from the 5K@ADA, an event held in June 2024, as our example.

The 5K@ADA results page posts only general participant data; it includes no sensitive information such as age, email, or precise location. The site has no restrictions on automated data collection methods. We'll walk through the process of setting up our tools, handling tables, managing pagination, and preparing our data for analysis.

5K@ADA Event

The 5K@ADA Virtual Challenge brings together people around the world and American Diabetes Association (ADA) annual conference attendees to emphasize the need for increased physical activity to help prevent diabetes and its complications. This awareness activity provides participants with the opportunity to raise public awareness about the importance of a healthy lifestyle in preventing and controlling diabetes.

Download Race Results Data Program

Setting Up Web Scraping Tools

Tools Used – Selenium and BeautifulSoup:

Selenium allows interaction with dynamic content, such as clicking buttons and navigating pages, while BeautifulSoup is excellent for parsing and extracting information from HTML documents.

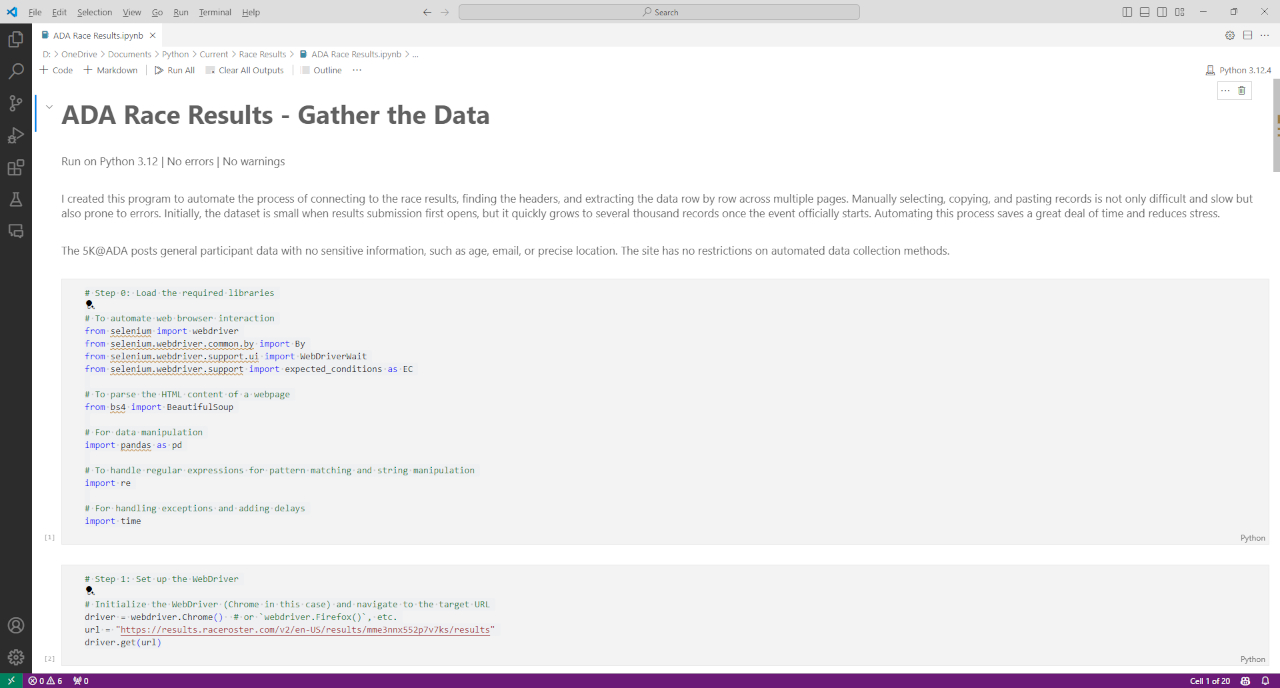

Initial Setup:

Start by loading the required libraries and setting up the Selenium WebDriver, which is essential for automating web interactions. Once the WebDriver is configured, use it to load the target webpage and retrieve its HTML content. With the page loaded, you can begin parsing it and identifying the data to scrape.

    # Step 0: Load the required libraries

    # To automate web browser interaction
    from selenium import webdriver
    from selenium.webdriver.common.by import By
    from selenium.webdriver.support.ui import WebDriverWait
    from selenium.webdriver.support import expected_conditions as EC

    # To parse the HTML content of a webpage
    from bs4 import BeautifulSoup

    # For data manipulation
    import pandas as pd

    # To handle regular expressions for pattern matching and string manipulation
    import re

    # For adding delays
    import time

    # Step 1: Set up the WebDriver
    # Initialize the WebDriver (Chrome in this case) and navigate to the target URL
    driver = webdriver.Chrome()  # or `webdriver.Firefox()`, etc.
    url = "https://results.raceroster.com/v2/en-US/results/mme3nnx552p7v7ks/results"
    driver.get(url)

Extracting and Cleaning Data

Functions:

Define functions to extract and clean the data. These functions handle the extraction of data from HTML tables, ensuring that each table's structure is correctly interpreted, and the data is stored appropriately.

    # Step 2: Define a function to clean headers
    # This function removes unwanted text from headers and trims whitespace
    def clean_header(header):
        return re.sub(r"Click on any of the columns headers to apply sorting", "", header).strip()

    # Step 3: Define a function to extract data from the current page
    def extract_data_from_page():
        # Use BeautifulSoup to parse the loaded page
        soup = BeautifulSoup(driver.page_source, 'html.parser')

        # Extract data
        results = []
        headers = []

        # Find the results table
        table = soup.find('table')
        if table is None:
            raise ValueError("No table found on the page.")

        # Find the table headers
        thead = table.find('thead')
        if thead:
            headers = [clean_header(header.get_text(strip=True)) for header in thead.find_all('th')]

        # Extract the data rows from the table
        tbody = table.find('tbody')
        if tbody:
            for row in tbody.find_all('tr'):
                cells = row.find_all('td')
                row_data = [cell.get_text(strip=True) for cell in cells]
                results.append(row_data)

        return headers, results

Handling Tables:

Tables on web pages can vary significantly in their structure. Use BeautifulSoup to locate the tables and extract the relevant rows and columns. Ensure that all table headers and data rows are correctly identified, parsed, and stored.
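
One way to check that headers and rows line up is to pair them explicitly and set aside any row whose cell count does not match. The sketch below is a minimal illustration in plain Python; the helper name `rows_to_records` and the sample values are hypothetical and not part of the original program.

```python
def rows_to_records(headers, rows):
    """Pair each row with the headers; collect rows that do not fit."""
    records = []
    skipped = []
    for row in rows:
        if len(row) == len(headers):
            # zip pairs each header with the cell in the same position
            records.append(dict(zip(headers, row)))
        else:
            # Keep malformed rows for inspection instead of dropping them silently
            skipped.append(row)
    return records, skipped


# Hypothetical sample data in the shape returned by extract_data_from_page()
sample_headers = ["Place", "Name", "Time"]
sample_rows = [
    ["1", "Runner A", "21:15"],
    ["2", "Runner B"],            # a short row, e.g. a spacer or ad row
    ["3", "Runner C", "1:02:03"],
]

records, skipped = rows_to_records(sample_headers, sample_rows)
print(records)
print(skipped)
```

Keeping the mismatched rows in a separate list makes it easier to spot a change in the page layout than discarding them would.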

Data Cleaning:

Once the data is extracted, normalize and prepare it for analysis. This involves ensuring consistency in data formats, such as converting time strings to a standard format and handling any discrepancies in the data.
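
As an illustration of converting time strings to a standard form, the sketch below turns MM:SS or HH:MM:SS strings into total seconds and back into a zero-padded HH:MM:SS string. The function names are hypothetical, and treating any other input as `None` is an assumption about how bad values should be handled.

```python
def time_to_seconds(time_str):
    """Convert 'MM:SS' or 'H:MM:SS' to total seconds; return None if malformed."""
    parts = time_str.strip().split(":")
    if len(parts) not in (2, 3) or not all(p.isdigit() for p in parts):
        return None
    seconds = 0
    for part in parts:
        # Each step shifts the running total up one unit (hours -> minutes -> seconds)
        seconds = seconds * 60 + int(part)
    return seconds


def seconds_to_hms(total_seconds):
    """Format a number of seconds as zero-padded HH:MM:SS."""
    hours, remainder = divmod(total_seconds, 3600)
    minutes, seconds = divmod(remainder, 60)
    return f"{hours:02d}:{minutes:02d}:{seconds:02d}"


print(time_to_seconds("25:30"))                    # 1530
print(seconds_to_hms(time_to_seconds("1:02:03")))  # 01:02:03
print(time_to_seconds("DNF"))                      # None
```

Working in seconds makes calculations such as averages or pace straightforward, while the formatted string stays readable in a CSV file.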

Handling Pagination

Pagination Loop:

One of the significant challenges in web scraping is handling multi-page results. Implement a loop that navigates through each page, extracting data continuously. This involves interacting with the 'Next' button on the page to load subsequent results.

    # Step 6: Loop through the pagination to extract data from subsequent pages
    # Note: `headers` and `all_results` are assumed to be initialized before
    # this loop (Steps 4 and 5 are not shown here)
    while True:
        try:
            # Find the "Next" button using aria-label attribute
            next_button = WebDriverWait(driver, 10).until(
                EC.element_to_be_clickable((By.XPATH, "//button[@aria-label='Next']"))
            )

            # Click the "Next" button using JavaScript to ensure it works
            driver.execute_script("arguments[0].click();", next_button)

            # Wait for the next page to load by checking the table presence
            time.sleep(3)  # Adding a sleep to ensure page loads completely
            WebDriverWait(driver, 10).until(
                EC.presence_of_element_located((By.CSS_SELECTOR, "table"))
            )

            # Extract data from the new page and add to all_results
            page_headers, page_results = extract_data_from_page()
            if not headers:
                headers = page_headers
            all_results.extend(page_results)

        except Exception as e:
            # If there is no "Next" button or another issue, break the loop
            print(f"Stopping pagination: {e}")
            # If pagination stops with an empty message, there are no more pages
            break

Challenges:

Managing pagination requires ensuring that each page loads correctly before attempting to extract data. Include checks in the code to verify that the page content is fully loaded and handle any potential errors gracefully.
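
The overall control flow can be sketched independently of Selenium: read the first page, then keep advancing until there is no next page or a safety limit is reached. In this minimal sketch, `scrape_all_pages`, its two callbacks, and the fake pages are all hypothetical; in the real program the callbacks would wrap `extract_data_from_page()` and the "Next" button click with its waits.

```python
def scrape_all_pages(extract_page, go_to_next_page, max_pages=1000):
    """Collect rows from every page.

    extract_page()    -> (headers, rows) for the page currently loaded
    go_to_next_page() -> True if a next page was loaded, False otherwise
    max_pages         -> safety cap so a misbehaving site cannot loop forever
    """
    headers, first_rows = extract_page()
    all_results = list(first_rows)
    pages_read = 1
    while pages_read < max_pages and go_to_next_page():
        page_headers, page_rows = extract_page()
        if not headers:
            headers = page_headers
        all_results.extend(page_rows)
        pages_read += 1
    return headers, all_results


# Fake three-page site used only to exercise the loop
fake_pages = [
    (["Name", "Time"], [["Runner A", "21:15"]]),
    (["Name", "Time"], [["Runner B", "30:02"]]),
    (["Name", "Time"], [["Runner C", "45:10"]]),
]
state = {"index": 0}


def fake_extract():
    return fake_pages[state["index"]]


def fake_next():
    if state["index"] + 1 >= len(fake_pages):
        return False
    state["index"] += 1
    return True


headers, all_results = scrape_all_pages(fake_extract, fake_next)
print(headers, len(all_results))
```

Separating the loop from the browser calls makes the stopping conditions easy to test without opening a browser.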

Data Normalization and Preparation

Time Column Normalization:

Having a consistent 'Time' format is important for accurate analysis. Some entries are in MM:SS format and some are in HH:MM:SS format. Convert all time entries to a standardized format, making it easier to perform calculations and visualizations.

    # Normalize the Time column to ensure a consistent format
    def normalize_time(time_str):
        parts = time_str.split(':')
        if len(parts) == 2:  # mm:ss
            return f"00:{time_str}"
        elif len(parts) == 3:  # h:mm:ss
            return time_str
        else:
            return time_str  # Return as is if not in the expected format

    df0['Time'] = df0['Time'].apply(normalize_time)

Column Conversion:

To prevent issues with data type conversions, explicitly convert certain columns to strings or other appropriate types. Here, the Place columns are prefixed with an apostrophe so that tools such as spreadsheets treat them as text rather than reinterpreting them.

    # Ensure Place columns are treated as text by prepending an apostrophe
    df0['Gender Place'] = df0['Gender Place'].apply(lambda x: f"'{x}")
    df0['Age Group Place'] = df0['Age Group Place'].apply(lambda x: f"'{x}")
    df0['Age Group Gender Place'] = df0['Age Group Gender Place'].apply(lambda x: f"'{x}")

Saving Cleaned Data:

Finally, save the cleaned data to a new CSV file, ready for further analysis or visualization.

Finalizing and Saving Data

Saving Data:

Save the cleaned and normalized data to a CSV file for further review and cleaning. This step ensures that the data is ready for subsequent analysis.
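
A minimal sketch of this step, assuming the scraped `headers` and `all_results` lists are turned into a DataFrame before saving. The sample values are hypothetical, and the text buffer stands in for a real file path so the example runs without writing to disk.

```python
import io

import pandas as pd

# Hypothetical stand-ins for the scraped headers and rows
headers = ["Place", "Name", "Time"]
all_results = [
    ["1", "Runner A", "00:21:15"],
    ["2", "Runner B", "1:02:03"],
]

# Build a DataFrame from the list of rows, using the scraped headers as columns
df0 = pd.DataFrame(all_results, columns=headers)

# index=False keeps the pandas row index out of the CSV
buffer = io.StringIO()
df0.to_csv(buffer, index=False)
csv_text = buffer.getvalue()
print(csv_text)
```

To write an actual file, pass a file name such as "ADA_race_results.csv" (the name read by the cleaning program) to `to_csv` in place of the buffer.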

Closing WebDriver:

Properly close the Selenium WebDriver to release resources and avoid potential issues.

    # Step 9: Close the WebDriver
    driver.quit()

Python Code (Jupyter Notebook) at GitHub

Clean the Race Results Program

Importing Packages

Tools Used:

Import necessary packages like `pandas` for data manipulation and `os` for handling file operations.

Custom NA Values

Setting Custom NA Values:

Set up custom NA values to handle missing data accurately, ensuring that 'NA' (Country Code for Namibia) is not misinterpreted as missing data.

    # Define custom NA values, excluding 'NA' to make sure that Country Code NA (Namibia) is not interpreted as missing data
    custom_na_values = ['N/A', 'NaN', 'null', '']

Data Cleaning and Preparation

Handling Custom NA Values:

Apply these custom NA values to the dataset, ensuring that missing data is handled correctly.

    # Load specific columns, ignore the default NA values and use the custom ones
    df0 = pd.read_csv("ADA_race_results.csv", usecols=['Name', 'Country', 'Time', 'Age Group', 'Enrollment'], keep_default_na=False, na_values=custom_na_values)

Dropping Duplicate Rows:

Find and drop duplicate rows, as some participants submitted their results twice.

Dropping the Name Column:

Drop the name column, as it's no longer needed after identifying duplicates.

Add Columns for Race Name and Year:

Add columns for the year and the race name, anticipating future race data to be added to the 2024 dataset.

Rename the Country Column:

Rename the Country column to Country Code to simplify joining with the Countries file in Tableau. Reorder the columns as desired.
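
The cleaning steps described above might look like the following sketch. The in-memory sample data, the Enrollment values, the new column names 'Race Name' and 'Year', and the final column order are all assumptions for illustration; the original notebook may differ.

```python
import io

import pandas as pd

# Hypothetical sample standing in for ADA_race_results.csv
sample_csv = io.StringIO(
    "Name,Country,Time,Age Group,Enrollment\n"
    "Runner A,NA,00:31:05,30-39,Virtual\n"
    "Runner A,NA,00:31:05,30-39,Virtual\n"
    "Runner B,US,00:27:40,N/A,In Person\n"
)

custom_na_values = ['N/A', 'NaN', 'null', '']

# keep_default_na=False stops pandas from reading the country code 'NA' as missing
df = pd.read_csv(sample_csv, keep_default_na=False, na_values=custom_na_values)

# Drop rows that are exact duplicates (participants who submitted twice)
df = df.drop_duplicates()

# The Name column is only needed to identify duplicates
df = df.drop(columns=['Name'])

# Add race name and year so future races can be appended to the same dataset
df['Race Name'] = '5K@ADA'
df['Year'] = 2024

# Rename Country to Country Code and reorder the columns
df = df.rename(columns={'Country': 'Country Code'})
df = df[['Race Name', 'Year', 'Country Code', 'Age Group', 'Time', 'Enrollment']]

print(df)
```

In this sample, the Namibia code 'NA' survives as text while 'N/A' in the Age Group column is read as missing, which is the behavior the custom NA list is meant to produce.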

Saving the Data

Saving Cleaned Data:

Save the cleaned data to a CSV file for creating visualizations in Tableau.

Python Code (Jupyter Notebook) at GitHub

Summary

We've explored the complexities of web scraping using Python, focusing on the use of Selenium and BeautifulSoup to handle dynamic content and pagination. By breaking down the process into manageable steps and addressing the challenges faced, we've demonstrated how to efficiently extract, clean, and prepare data for analysis. Whether you're a beginner or an experienced developer, we hope this guide has provided valuable insights and encouraged you to explore further enhancements in your web scraping projects.

Frequently Asked Questions

How can I ensure my web scraper adheres to ethical data usage standards?

Ensure ethical data usage through:

- Transparency: Be clear about your intentions and data use.

- Consent: Obtain consent if scraping personal data.

- Compliance: Follow legal and regulatory guidelines.

- Respect: Do not misuse or misrepresent the data collected.

What are some common pitfalls in web scraping and how can I avoid them?

Common pitfalls include:

- Ignoring robots.txt: Always check robots.txt.

- Not Handling Errors: Implement robust error handling.

- Overloading Servers: Use rate limiting.

- Hardcoding Selectors: Use more flexible selectors.

- Data Cleaning: Ensure data is cleaned and validated.
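
Two of these pitfalls can be addressed with the standard library alone. The sketch below checks URLs against robots.txt rules with `urllib.robotparser` and spaces out requests with a small rate limiter. The robots.txt content, the example.com URLs, the user agent name, and the `RateLimiter` class are hypothetical; a real scraper would load the site's actual robots.txt.

```python
import time
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, parsed from text so no network access is needed
robots_txt = """\
User-agent: *
Disallow: /private/
"""

parser = RobotFileParser()
parser.parse(robots_txt.splitlines())

allowed = parser.can_fetch("my-scraper", "https://example.com/results/page1")
blocked = parser.can_fetch("my-scraper", "https://example.com/private/data")
print(allowed, blocked)


class RateLimiter:
    """Ensure at least `min_interval` seconds pass between calls to wait()."""

    def __init__(self, min_interval):
        self.min_interval = min_interval
        self._last = None

    def wait(self):
        now = time.monotonic()
        if self._last is not None:
            remaining = self.min_interval - (now - self._last)
            if remaining > 0:
                time.sleep(remaining)
        self._last = time.monotonic()


limiter = RateLimiter(min_interval=0.05)  # short interval for demonstration only
start = time.monotonic()
for _ in range(3):
    limiter.wait()  # a page request would follow each wait()
elapsed = time.monotonic() - start
```

Calling `limiter.wait()` before each page load keeps the request rate bounded regardless of how quickly the parsing code runs.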

What are some common HTTP status codes I might encounter, and how should I handle them?

Common HTTP status codes:

- 200 OK: Successful request.

- 403 Forbidden: Access denied; check permissions or use different headers.

- 404 Not Found: URL not found; check the URL for correctness.

- 429 Too Many Requests: Rate limit exceeded; implement delays.

- 500 Internal Server Error: Server issue; retry after some time.
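
One possible way to act on these codes is to retry only the ones that may succeed later, waiting longer after each attempt. In the sketch below, the function names, the choice of retryable codes, and the delay values are assumptions for illustration rather than fixed rules.

```python
# Codes from the list above where waiting and retrying may help
RETRYABLE_STATUSES = {429, 500}


def backoff_delay(attempt, base=1.0, cap=60.0):
    """Exponential backoff: base, 2*base, 4*base, ... capped at `cap` seconds."""
    return min(cap, base * 2 ** attempt)


def decide(status, attempt, max_retries=5):
    """Return (action, delay_seconds) for an HTTP status and zero-based attempt number."""
    if status == 200:
        return ("ok", 0.0)
    if status in RETRYABLE_STATUSES and attempt < max_retries:
        return ("retry", backoff_delay(attempt))
    # 403 and 404 will not fix themselves; retries are also exhausted here
    return ("fail", 0.0)


print(decide(200, 0))  # ('ok', 0.0)
print(decide(429, 2))  # ('retry', 4.0)
print(decide(404, 0))  # ('fail', 0.0)
print(decide(500, 5))  # ('fail', 0.0)
```

The caller would sleep for the returned delay before repeating the request, and log or skip the URL when the action is "fail".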

What are some alternative methods to web scraping for data collection?

Alternatives to web scraping include:

- Public APIs: Use APIs provided by the website to access structured data.

- Data Dumps: Look for publicly available data dumps.

- Third-Party Data Providers: Use data services that aggregate and provide data.

How can I use machine learning to enhance my web scraping process?

Integrate machine learning for:

- Data Extraction: Use NLP models to extract structured data from unstructured text.

- Pattern Recognition: Identify patterns in data for better parsing.

- Anomaly Detection: Detect and handle anomalies in scraped data.