# Import packages

# For data manipulation

import numpy as np

import pandas as pd

# for displaying and modifying the working directory

import os as os

# For working with datetime objects

from datetime import datetimeAbstract

Managing continuous glucose monitor (CGM) data efficiently becomes increasingly challenging as datasets grow larger. This post details a streamlined approach for preparing a base dataset to facilitate CGM data analysis. Key steps include optimizing the data download process, filtering relevant information, formatting and enriching the dataset, and preparing it for visualization. By implementing these methods, you can reduce redundancy, ensure data integrity, and create a foundation for meaningful analysis and visualization.

Key Points

- Challenge: Daily downloads of the full dataset became impractical as the dataset grew larger.

- Solution: Store historical data in a CSV file and append only new data, reducing download time and storage needs.

- Filtering: Retain only relevant columns and rows (e.g., glucose readings) while discarding unnecessary metadata and non-glucose entries.

- Formatting: Standardize columns, extract additional fields, and add a Treatment column to enrich the dataset.

- Validation: Check for missing dates, remove duplicates, and ensure data consistency.

- Output: Save processed data in two formats—complete history for records and a 90-day subset for visualization in Tableau.

Introduction

Managing large datasets is a common challenge for anyone working with continuous glucose monitor (CGM) data. Initially, I downloaded the entire dataset daily, which worked fine when the dataset was small. However, as it grew, this approach became inefficient. I needed a solution to handle historical data without re-downloading it each time. This led to the development of a streamlined process for managing, cleaning, and preparing CGM data for analysis.

Key Objectives

- Streamline Data Downloads: Retain historical data in a CSV file and download only new data daily.

- Filter and Clean Data: Keep relevant columns and rows for analysis while removing unnecessary information.

- Format and Enrich Data: Standardize formats, identify missing or duplicate entries, and add meaningful columns.

- Save and Prepare for Visualization: Store processed data and prepare subsets for visualization tools like Tableau.

Step 1: Downloading Only the Needed Data

Downloading the entire dataset daily quickly became impractical. By storing historical data in a CSV file, I can append only the latest readings to the existing data. This approach reduces both download time and storage requirements.

Key actions:

- Download the new data.

- Load the existing history file.

- Append the new data to the history file, ensuring no duplicates.

Step 2: Filtering Relevant Columns and Rows

The downloaded data includes columns and rows not needed for analysis. To clean the data:

- Select only the relevant columns: Index, Timestamp, Event Type, and Glucose Value.

- Retain rows where Event Type equals EGV (Estimated Glucose Value). This removes metadata and non-glucose entries, leaving only the glucose readings.

# Load the new data from Clarity df0 = pd.read_csv("Clarity_Export_Smith_Jeffrey.csv", usecols=['Index', 'Timestamp (YYYY-MM-DDThh:mm:ss)', 'Event Type', 'Glucose Value (mg/dL)']) # Keep only the rows where Event Type is EGV (estimated glucose value) df0 = df0[df0['Event Type'] == 'EGV']

Step 3: Formatting and Enriching the Data

The raw data requires formatting for consistency and usability:

- Renaming Columns: Rename Timestamp to DateTime for clarity.

# Rename and format Timestamp column df0['DateTime'] = pd.to_datetime(df0.pop('Timestamp (YYYY-MM-DDThh:mm:ss)'), format='%Y-%m-%dT%H:%M:%S') - Extracting Additional Fields: Create columns for Date and Time from the DateTime column, formatting the time as AM/PM.

# Create a Date column from the DateTime column df0['Date'] = df0['DateTime'].dt.date # Create a Time column from the DateTime column df0['Time'] = df0['DateTime'].dt.time - Adding a Treatment Column: Classify each reading as treated or untreated using predefined conditions.

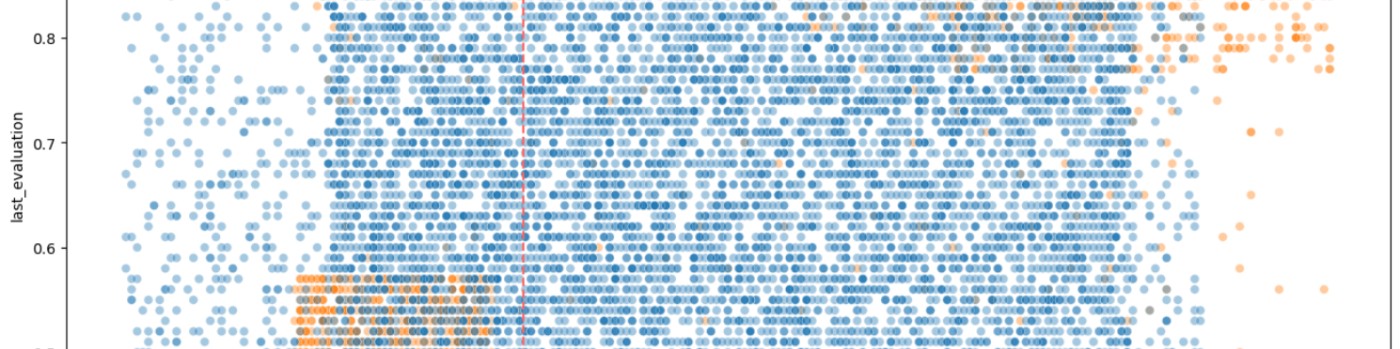

# Create a column for Treatment df0['Date'] = pd.to_datetime(df0['Date']) # Convert Date from text to datetime format conditions = [ (df0['Date'] > '2025-02-25'), (df0['Date'] > '2024-06-18'), (df0['Date'] > '2024-01-30'), (df0['Date'] > '2023-07-18'), (df0['Date'] > '2023-02-28'), (df0['Date'] > '2023-01-31') ] choices = [ 'Mounjaro 15', 'Mounjaro 12.5', 'Mounjaro 10', 'Mounjaro 7.5', 'Mounjaro 5', 'Mounjaro 2.5' ] df0['Treatment'] = np.select(conditions, choices, default='Untreated') - Ensuring data integrity:

- Check for and report missing dates in the range.

- Identify and remove duplicate rows.

# Check for missing dates using numpy set difference missing_dates = np.setdiff1d(complete_date_range, unique_dates) # Display missing dates, if any if len(missing_dates) > 0: print("Missing dates:") print(missing_dates) else: print("No missing dates, all dates are consecutive.") # Find and count duplicate rows duplicate_count = df2.duplicated().sum() print(f"Number of duplicate rows: {duplicate_count}") # Delete duplicate rows df2 = df2.drop_duplicates()

Step 4: Saving Processed Data

Processed data is saved in two formats:

- Complete History: All data is stored in a CSV file for record-keeping.

- Recent Data for Visualization: Only the most recent 90 days are saved separately for use in Tableau visualizations.

Conclusion

Building a base dataset for CGM data is a critical first step in preparing for meaningful analysis and visualization. By optimizing the data download process, filtering unnecessary information, and ensuring data integrity through formatting and validation, this approach creates a streamlined, reliable foundation for further exploration. Saving both complete history and recent data subsets ensures flexibility, whether maintaining records or generating visual insights in tools like Tableau.

Frequently Asked Questions

How do you handle missing data when gaps are identified in the date range?

If gaps in dates are identified, I download the data for the missing dates or leave those dates out if I don't have the data.

What specific Python libraries are required to execute this code?

Key libraries include:

- pandas for data manipulation and analysis.

- numpy for numerical operations.

- datetime for handling date and time fields.

- These libraries are readily available in Python's ecosystem.

Can this method be adapted for data from other glucose monitoring systems?

Yes, this approach can be adapted as long as the other systems provide data export options. You may need to modify column selections, filtering logic, or data formats to match the specific structure of the new dataset.

Why is only the most recent 90 days of data saved for visualization in Tableau?

The 90-day limit keeps the dataset manageable for Tableau and ensures that the visualizations remain focused on recent trends.

How do you ensure that the data cleaning process does not unintentionally remove valid readings?

Carefully define filtering criteria, such as retaining rows where Event Type is EGV. Validate the filtered dataset by comparing the number of rows before and after filtering, ensuring all legitimate readings remain.