Abstract

Explore advanced Python techniques for efficient data retrieval, focusing on handling large datasets, optimizing API calls, and ensuring data integrity. Whether you're working in healthcare analytics, financial modeling, or large-scale data science, these strategies will enhance your workflow and analysis capabilities.

Key Points

- Efficient data retrieval using advanced Python techniques.

- Strategies for handling large datasets and optimizing API performance.

- Best practices for data cleaning and preparation.

- Essential tools for developers and data analysts in high-demand fields.

Introduction

Python offers powerful tools for data retrieval, particularly when working with large datasets or APIs. This example uses more advanced Python techniques to handle larger datasets, optimize API calls, and efficiently manipulate data. These are crucial strategies for data analysts and developers working in fields that require quick access to comprehensive datasets, such as healthcare analytics, financial modeling, or large-scale data science projects.

Step 1: Import the Required Packages

Before we begin, we need to import the necessary Python libraries that will help us retrieve and manipulate the data.

# Import packages

# For data manipulation

import pandas as pd

# For making HTTP requests

import requests

# For adding delays in between HTTP requests

import time

# For creating in-memory text streams

from io import StringIO as sio

# For creating and displaying progress bars

from tqdm import tqdmStep 2: Setting Up the API Connection and Retrieving Total Record Count

The next step is to establish a connection with the API and retrieve the total record count using the JSON format. Knowing the total number of records helps in planning the pagination strategy. The code also allows for a graceful exit if it's not able to retrieve the count.

Dataset: The Respiratory Virus Hospitalization Surveillance Network (RESP-NET) conducts population-based surveillance for laboratory-confirmed hospitalizations associated with COVID-19, respiratory syncytial virus (RSV), and influenza.

# Define the API endpoint

url = "https://data.cdc.gov/resource/kvib-3txy"# Function to get total record count and handle errors

def get_total_count():

try:

response = requests.get(f"{url}.json", params={"$select": "count(*)"})

response.raise_for_status() # Check if the request was successful

data = response.json()

return int(data[0]['count'])

except requests.exceptions.HTTPError as http_err:

print(f"HTTP error occurred: {http_err}") # Print the HTTP error

except requests.exceptions.RequestException as req_err:

print(f"Error occurred during the request: {req_err}") # Print other request errors

except Exception as err:

print(f"An error occurred: {err}") # Print any other errors

return None # Return None if there was an error# Get total record count

total_count = get_total_count()

if total_count is not None:

print(f"Total record count: {total_count}")

else:

print("Failed to retrieve the total record count.")Step 3: Implementing Pagination to Retrieve All Data

Now that we have the total record count, we can implement a loop that will read 1,000 records at a time via CSV requests until the entire dataset is retrieved. As with the code for getting the record count, this code allows for a graceful exit in the event of an error.

# Initialize parameters

params = {

"$limit": 1000, # Number of records per request

"$offset": 0 # Starting point for records

}# Initialize an empty list to store the data

data_list = []

# Loop to fetch all records with progress bar

with tqdm(total=total_count, desc="Downloading Records", unit="record") as pbar:

while params['$offset'] < total_count:

# Make the request to the API

response = requests.get(f"{url}.csv", params=params)

# Check if the response is successful

if response.status_code != 200:

print(f"Error: Received status code {response.status_code}")

print(f"Response: {response.text}")

break

# Attempt to parse the response as CSV

try:

data = pd.read_csv(sio(response.text))

except Exception as e:

print(f"Error: Unable to parse response as CSV: {e}")

break

# Check if there are records in the response

if data.empty:

print("No data to fetch.")

break

# Append the data to the list

data_list.append(data)

# Update the progress bar

pbar.update(len(data))

# Increment the offset for the next request

params['$offset'] += params['$limit']

# Delay between requests to handle rate limiting

time.sleep(1) # Delay for 1 secondStep 4: Concatenating and Analyzing the Data

Once all the data is retrieved, we can combine it into a single Pandas DataFrame using pd.concat for further analysis and processing.

# Concatenate all data into a single DataFrame

df0 = pd.concat(data_list, ignore_index=True)

# Display the final record count

print(f"Total records retrieved: {len(df0)}")Step 5: Cleaning the Data

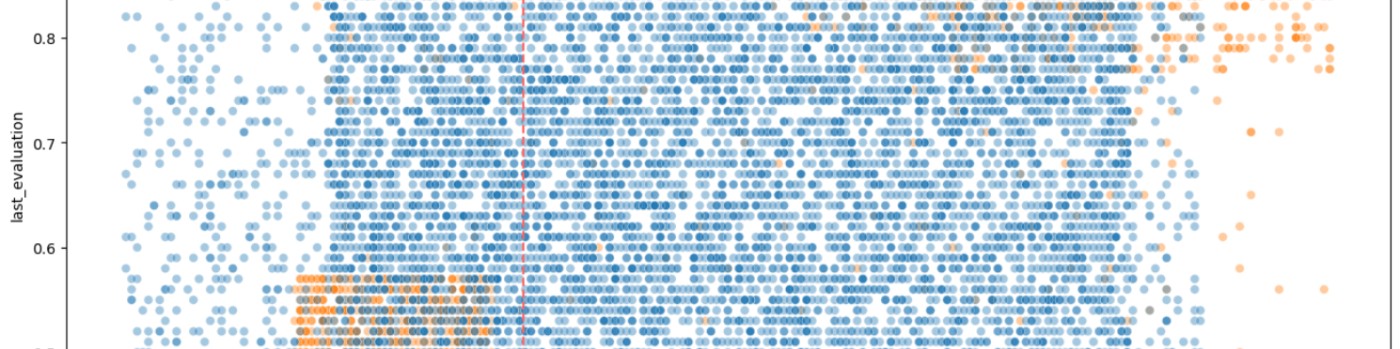

Data cleaning is an essential step in preparing a dataset for analysis. Depending on the dataset, this can involve removing duplicates, handling missing values, or converting data types. The data cleaning is for demonstration only. There are currently no plans for visualizations in Tableau.

# Check for missing values in the dataframe

df0.isnull().sum()# Drop rows with missing values

df0.dropna(inplace=True)# Specify the age_group rows to delete.

# Create a list of age groups to drop (they overlap with other age groups)

agegroups_to_drop = ['0-4 years', '18-49 years', '5-17 years', '65+ years', '75+years']

# Create a mask to filter the rows

mask = df0['age_group'].isin(agegroups_to_drop)

# Drop the rows that are not in the list

df0.drop(df0[mask].index, inplace=True)# Drop the rows where weekly_rate and cumulative_rate are 0

df0 = df0[df0['weekly_rate'] != 0]

df0 = df0[df0['cumulative_rate'] != 0]# Convert the 'Week Ending' column to datetime format

df0['Week Ending'] = pd.to_datetime(df0['Week Ending'])Step 6: Saving the Cleaned Data

After cleaning the data, it's important to save it for future use. Exporting the DataFrame to a CSV file makes it available for further analysis without having to go through the download process again.

# Save the cleaned data to a CSV file

df0.to_csv('cleaned_respnet_data.csv', index=False)Summary

Advanced data retrieval with Python, especially when dealing with large datasets or APIs, requires a combination of strategic planning and efficient coding practices. By understanding the strengths of different data formats—such as using JSON for metadata and CSV for bulk data—you can optimize your data retrieval processes. And implementing effective data cleaning and saving practices ensures that your datasets are ready for analysis.

Whatever your field of work, mastering these techniques will significantly enhance your data analysis capabilities and improve the efficiency of your workflows.

For more in-depth tutorials on working with APIs in Python, you might find these resources helpful:

Python code (Jupyter Notebook) at GitHub

Frequently Asked Questions

What are some common pitfalls to avoid when working with APIs?

Common pitfalls include not handling rate limits properly, ignoring API versioning (which can lead to breaking changes), not validating API responses for errors, and not securing sensitive information like API keys. To avoid these, always follow best practices for error handling, validation, and security, and keep your code up-to-date with API changes.

What are some best practices for managing API rate limits?

API rate limits restrict the number of requests you can make in a given time period. To manage these limits, implement a delay between requests using `time.sleep()`, check the headers of the API response for rate limit information, and use exponential backoff for retries. Some APIs also provide specific endpoints to check your current usage against the rate limit.

What are the security considerations when working with sensitive data in Python?

When handling sensitive data, ensure that API keys and tokens are stored securely, such as in environment variables or using a secrets manager. Use HTTPS to encrypt data in transit, and consider encrypting sensitive data at rest using libraries like `cryptography`. Implement logging to track data access without exposing sensitive information.

How can the retrieved and cleaned data be visualized effectively, even if not using Tableau?

You can visualize data using Python libraries like `matplotlib`, `seaborn`, or `plotly`. These libraries offer a range of visualizations, from basic plots to interactive dashboards. For example, `plotly` allows you to create interactive visualizations that can be embedded in web pages or shared with stakeholders.

Are there alternative methods for storing the cleaned data besides CSV, such as databases or cloud storage?

Yes, alternatives include using databases like SQLite, PostgreSQL, or cloud-based storage solutions like AWS S3, Google Cloud Storage, or Azure Blob Storage. You can also store data in binary formats like Parquet or HDF5, which are more efficient than CSV for large datasets. Libraries like `pyarrow` or `h5py` can help with this.