Abstract

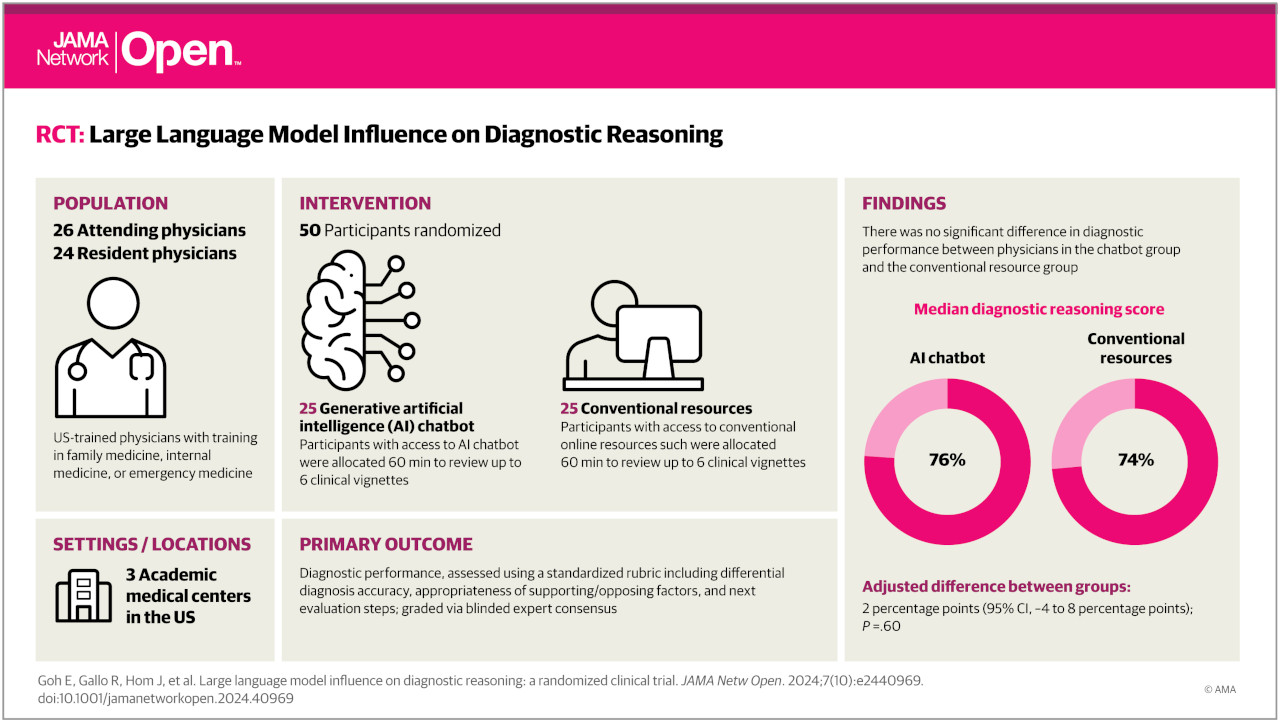

A recent study published in JAMA Network Open examined whether AI chatbots, specifically large language models (LLMs) like ChatGPT-4, improve physicians' diagnostic reasoning. The randomized clinical trial found that while the LLM alone scored higher than physicians on diagnostic reasoning, giving physicians access to the AI tool did not significantly improve their performance compared with conventional resources. The findings highlight the potential of AI in diagnostics but underscore the need for better integration, clinician training, and further research to optimize AI's role in medical decision-making.

Key Points

- A JAMA Network Open study assessed the impact of AI chatbots on physicians' diagnostic reasoning.

- The trial involved 50 physicians diagnosing clinical vignettes with or without LLM assistance.

- The LLM alone achieved a median diagnostic reasoning score of 92%, outperforming both physician groups.

- Physicians using the AI tool scored similarly (median 76%) to those using conventional resources (74%).

- Experts note that AI's strengths include processing large amounts of data consistently, which may help reduce cognitive biases.

- Experts emphasize the need for better AI integration, clinician training, and model refinement.

- Future research should explore how AI can consistently support and enhance medical decision-making.

A recent study published in JAMA Network Open investigated whether large language models (LLMs) like ChatGPT-4 improve diagnostic reasoning among physicians. The randomized clinical trial, conducted across multiple academic medical centers, found that giving physicians access to an LLM did not significantly improve their diagnostic reasoning scores compared with using conventional resources.

- The study involved 50 physicians (attendings and residents) who were given clinical vignettes to diagnose, with one group having access to an LLM and the other using traditional resources.

- Interestingly, the LLM alone outperformed both physician groups, achieving a median diagnostic reasoning score of 92%, compared to 76% for physicians using the LLM and 74% for those using conventional resources. This suggests potential for AI in diagnostics but highlights the need for better integration and training.

- Experts commenting on the study noted that AI's strength lies in processing vast amounts of data consistently, potentially reducing cognitive biases. However, they cautioned that AI systems depend entirely on their training data and lack the nuanced understanding of human interaction critical in complex diagnoses, particularly in mental health.

Why this matters: While AI holds promise for improving diagnostic accuracy and efficiency, this study suggests that simply providing access to LLMs isn't enough. Effective integration requires careful consideration of how these tools interact with clinicians' expertise, as well as ongoing refinement of AI models and user training. Further research is needed to define diagnostic accuracy more clearly and explore how LLMs can best support clinicians in making more consistent and accurate diagnoses.

Reference: Goh E, Gallo R, Hom J, et al. Large language model influence on diagnostic reasoning: a randomized clinical trial. JAMA Netw Open. 2024;7(10):e2440969. doi:10.1001/jamanetworkopen.2024.40969.

Link: https://jamanetwork.com/journals/jamanetworkopen/fullarticle/2825395